Understanding the problem(s)

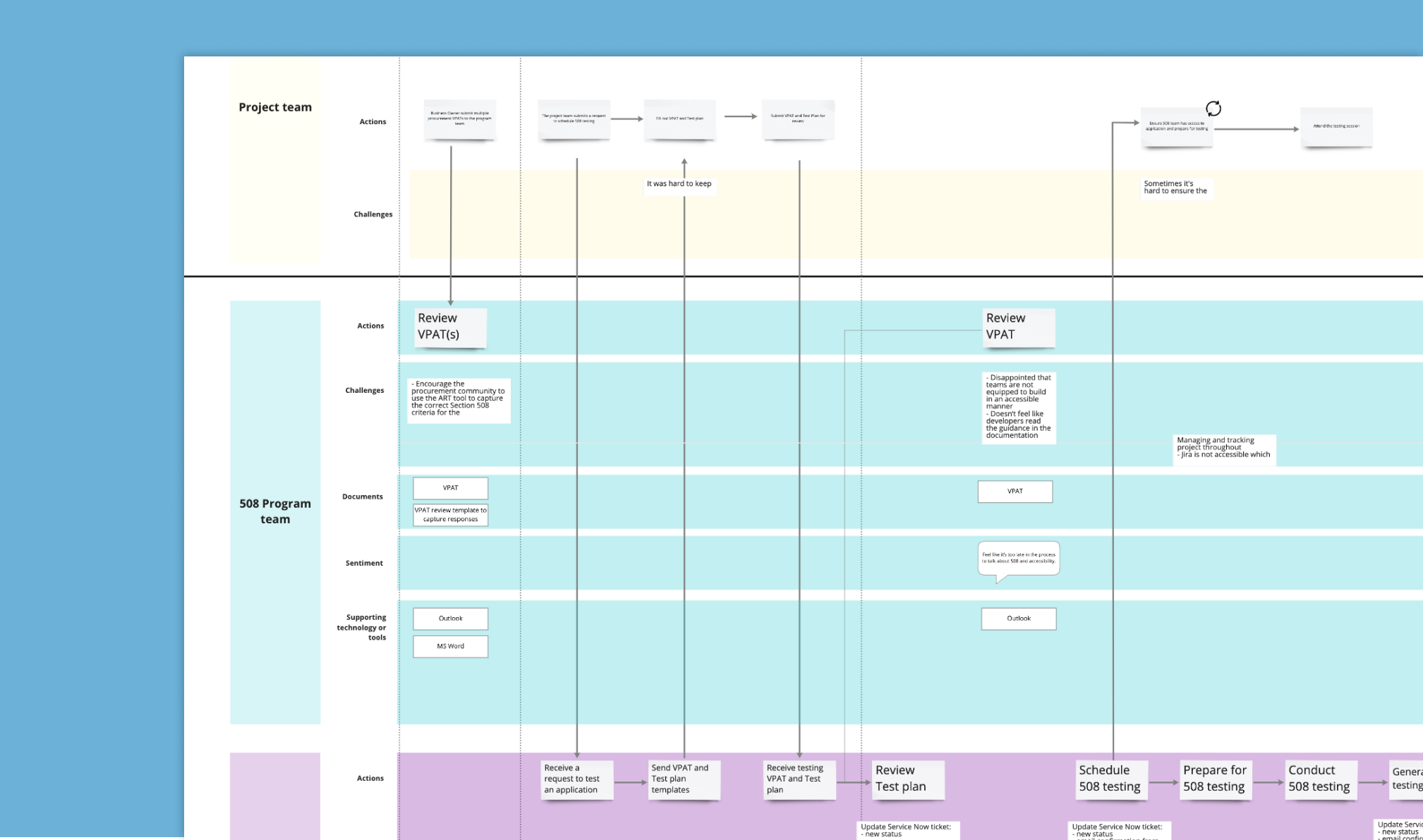

Through our discovery we learned that the 508 process is actually quite enjoyable for project teams. The 508 team is responsive, kind, and knowledgeable, qualities that are sometimes lacking in other CMS processes. When we spoke with the back office, they also tended to share the positives of their current process and offered few criticisms. Some concerns that they did share were:

- Since their teams are split between feds (program team) and contractors (testing team), information and documents are siloed, leading to a lot of email back and forth

- There is no visibility into projects “In Remediation”, which is a significant compliance issue for CMS

After consistently probing with project teams, we learned they wanted more visibility into where they are in the process and the ability to more easily reuse documentation, as the VPAT and Test Plan can be time-consuming.

Sample service blueprint with project teams submitting a 508 request to the back office

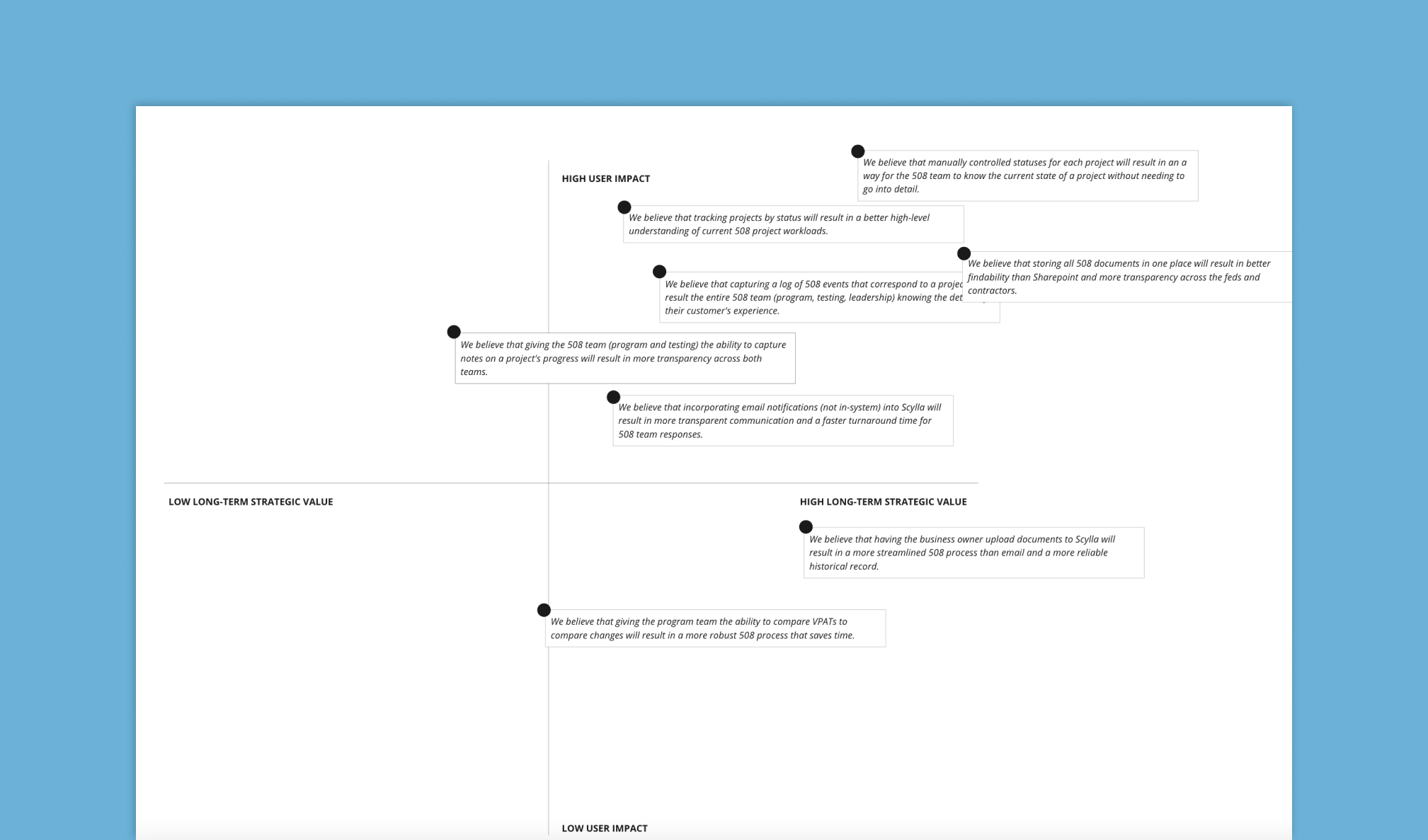

This led us to testing a few concepts:

1. Access to all VPATs

2. Document storage and access

3. Keeping track of projects

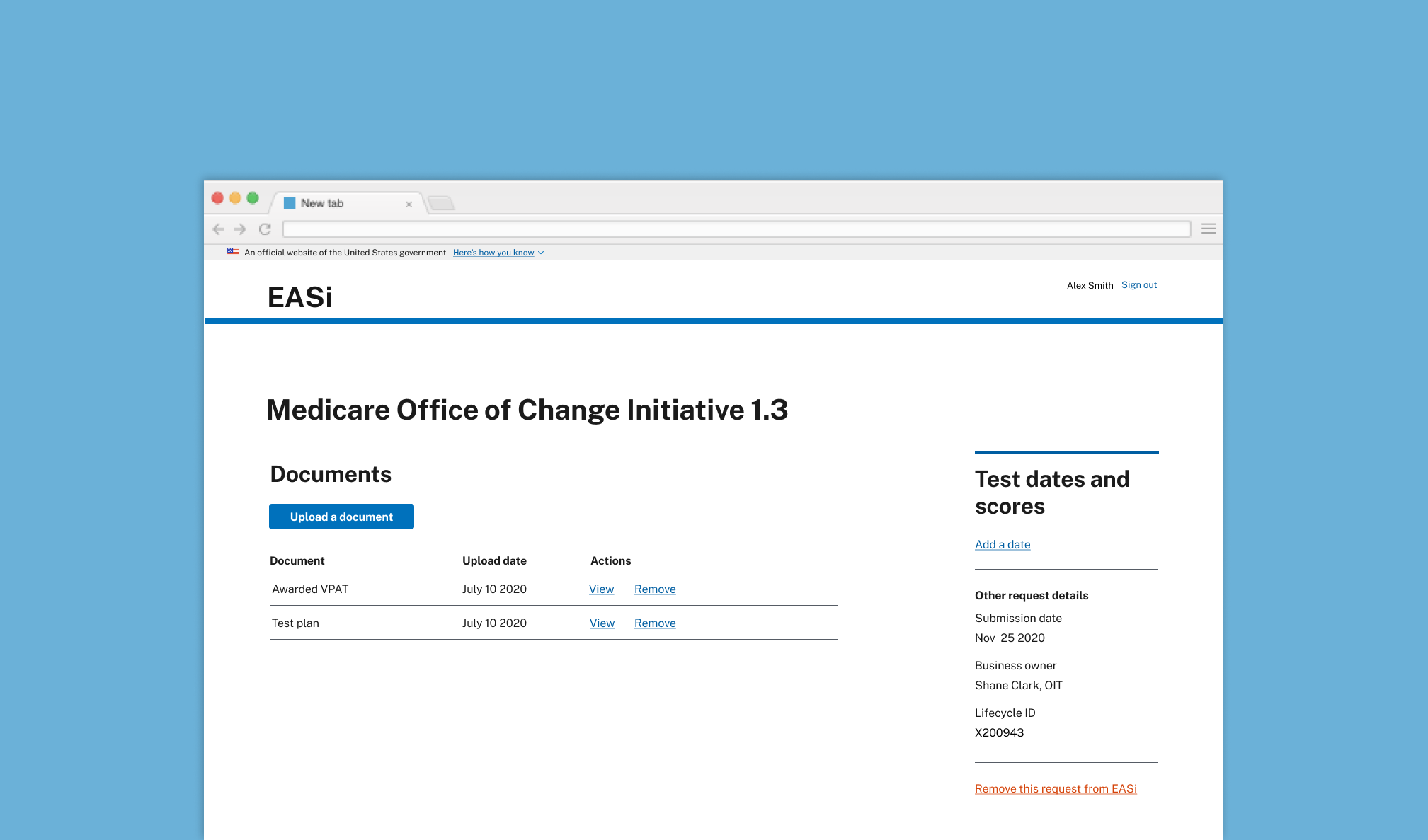

Sample concept test we put in front of users during Discovery

Our concept testing sessions focused on the back office for the 508 workflow, mostly because of what we learned from IT governance, where we looped in the back office team too late in the process. Again, for all governance processes, we strongly encourage you to focus on the back office experience first. The storyboards that resonated the most were “Document storage and access” and “Keeping track of projects”, so we moved to building a prototype in code.

How we prioritized functionality to build and test further

The design team paired with an engineer to build the prototype in code so that we could test usability more effectively. With testing in code, we were also able to more accurately scope out functionality and only take on work that was feasible. If you're curious, feel free to take a look at one of our coded prototypes.

Overall, we learned we were moving in the right direction and that storing documents and tracking statuses provided the back office team significant value.

A snapshot of our Dovetail organization

How we solved it

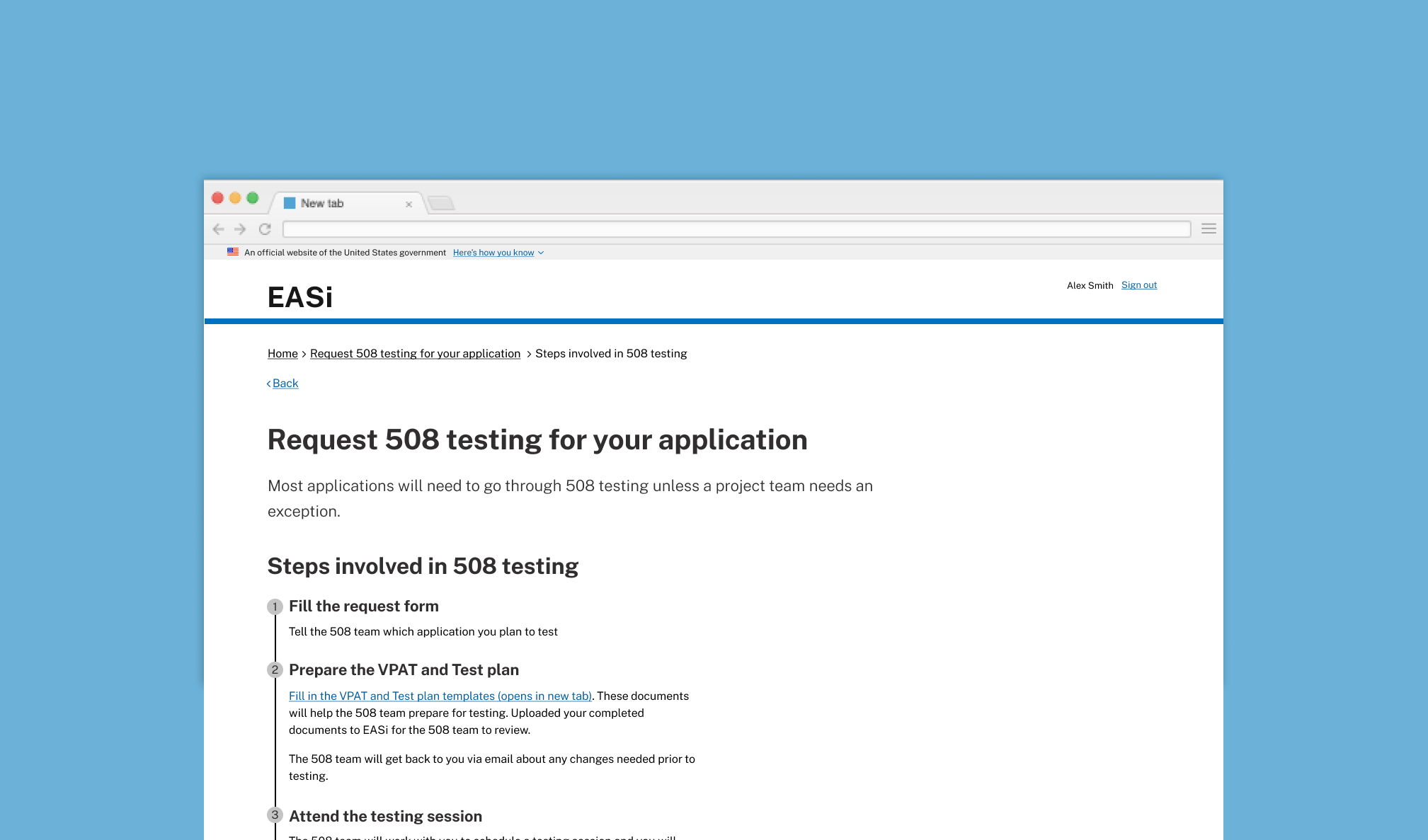

Based on our concept testing results, our engineering team started building the document upload functionality for the back office. Working from the back office product, we were able to appropriately scope the customer experience and start testing how the end user would interact with the service. We again leaned heavily on help and guidance to inform folks about the importance of 508 testing and what they can expect from the process.

We continued to usability test the back office experience as well, layering on capabilities to track statuses and manage dates & test scores. This helped the back office move away from managing their 508 testing in four different places, and also created a usable tool for their team members who have access needs.

Sample help and guidance for 508 testing requests. See it in Figma.

Learnings

We can learn a lot more about our users and the strength of our concepts when they remain abstract for the first few rounds of testing. By using a few different storyboards as provocations, we were able to more confidently understand high-value use cases for our concepts, rather than drilling down on the flow of a clickthrough app. This made moving from sketch to code so much easier, as we spent less time questioning whether specific functionality was valuable and instead were able to ensure it was usable.

This was also my first time building accessibly from the start of a project. Our discovery activities were all made accessible (mostly Word docs), the concept tests were adapted into a conversation for our users with access needs, and our usability tests were run with a screen reader when appropriate. I paired with our engineers on checking completion of stories, including whether they passed our accessibility standards. I still have a lot to learn about accessibility, but this project was such a wonderful experience to build empathy and ensure what we are building is equitable to all people, regardless of abilities.